Querying an Inference Endpoint

After your Inference Endpoint is up and running, you can start querying it to get predictions from the deployed model.

Back in the Inference Endpoints list, the URL column of your newly created Inference Endpoint is populated after a few seconds. This is the base URL of your endpoint.
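If you would rather call the endpoint from code than from curl, the following is a minimal Python sketch that uses only the standard library. It assumes the `/v1/completions` path, JSON payload, and `Authorization: Bearer` header from the TinyLlama curl example in this section, and a response shaped like the sample JSON response that follows that example. Other models may expose a different path. The helper names (`build_completion_request`, `send_request`, `parse_completion`, `CompletionResult`) are hypothetical and not part of any FlexAI SDK. Treating `finish_reason == "length"` as truncation is an assumption based on the sample response, where `completion_tokens` equals the requested `max_tokens` of 240.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Any, Dict

# Assumption: path taken from the TinyLlama curl example; other models may differ.
COMPLETIONS_PATH = "/v1/completions"


def build_completion_request(base_url: str, api_key: str, model: str,
                             prompt: str, max_tokens: int) -> urllib.request.Request:
    """Build (but do not send) a POST request equivalent to the curl example."""
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=base_url.rstrip("/") + COMPLETIONS_PATH,  # base URL from the URL column
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the endpoint's API key
        },
        method="POST",
    )


def send_request(req: urllib.request.Request, timeout: float = 60.0) -> Dict[str, Any]:
    """Send the request and decode the JSON body.

    urlopen raises urllib.error.HTTPError for error status codes (e.g. 401, 500).
    """
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


@dataclass
class CompletionResult:
    text: str
    truncated: bool  # assumption: True when finish_reason is "length"
    prompt_tokens: int
    completion_tokens: int
    total_tokens: int


def parse_completion(response: Dict[str, Any]) -> CompletionResult:
    """Extract the first choice and the token usage from a completions response."""
    choice = response["choices"][0]
    usage = response["usage"]
    return CompletionResult(
        text=choice["text"],
        truncated=choice.get("finish_reason") == "length",
        prompt_tokens=usage["prompt_tokens"],
        completion_tokens=usage["completion_tokens"],
        total_tokens=usage["total_tokens"],
    )


# Example usage (replace the placeholders with your endpoint URL and API key):
# req = build_completion_request("https://<your-endpoint-url>", "<your-api-key>",
#                                "TinyLlama/TinyLlama-1.1B-Chat-v1.0",
#                                "Why do koalas eat eucalyptus leaves?", 240)
# result = parse_completion(send_request(req))
# print(result.text, result.truncated)
```

Building the request separately from sending it keeps the payload and headers easy to inspect without network access. In the sample response, `prompt_tokens` (14) plus `completion_tokens` (240) equals `total_tokens` (254).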

The API path you append to this base URL varies depending on the model you are using. For instance, here’s how you can query TinyLlama/TinyLlama-1.1B-Chat-v1.0 using curl:

```shell
curl -X POST https://inference-efcaac4c-c228-43d5-bdcf-f6689feb8747-d5e532c3.flex.ai/v1/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer efd6b88e-42de-4db1-ab94-d0f3f7873de1" \
  -d '{
    "model": "TinyLlama/TinyLlama-1.1B-Chat-v1.0",
    "prompt": "Why do koalas eat eucalyptus leaves?",
    "max_tokens": 240
  }'
```

A response similar to this will be returned:

```json
{
  "id": "cmpl-6bfdc141-4f40-45b0-a241-b9fc9a42b8da",
  "object": "text_completion",
  "created": 1755973474,
  "model": "TinyLlama/TinyLlama-1.1B-Chat-v1.0",
  "choices": [
    {
      "index": 0,
      "text": " Eucalyptus is a plant that is specific to tropical regions around the world. The leaves of the eucalyptus are consumed by koals as a source of food. Emus are carnivorous and eat meat and grasses that are not leafy. Koalas don’t have much muscle mass, so they rely on their brains and handlers to eat leaves. Koalas are mainly scavengers and won’t eat fruit, but they can occasionally swallow large parts of bark if they are hungry and their food source is present. They’ve been known to swallow full baskets of fruit in some cases. Emus are carnivorous, meaning they have sharp teeth and a powerful jaw, so they do consume meat. Other animals eat eucalyptus leaves, but emus are the only known diurnal carnivores (carnivores that hunt at dawn or dusk for their food). is found in Australia’s biggest land animal, the koala. How do koalas depend on eucalyptus leaves as a food source? The eucal",
      "logprobs": null,
      "finish_reason": "length",
      "stop_reason": null,
      "prompt_logprobs": null
    }
  ],
  "usage": {
    "prompt_tokens": 14,
    "total_tokens": 254,
    "completion_tokens": 240,
    "prompt_tokens_details": null
  },
  "kv_transfer_params": null
}
```

Inference Endpoint Details

Select the gear icon ⚙️ (labeled Configure) in the Actions column of your newly created Inference Endpoint’s row in the Inference Endpoints list to open a detailed overview of the Inference Endpoint deployment.

The Details tab opens by default, showing all the relevant information about your Inference Endpoint.

The Details tab

This tab provides you with detailed information about your Inference Endpoint, including:

Summary

| Field | Description |
|---|---|
| ID | The unique identifier of the Inference Endpoint. |
| Name | The name you assigned to the Inference Endpoint. |
| Status | The current status of the Inference Endpoint (e.g., Running or Stopped). |
| URL | The base URL of the Inference Endpoint, which you use to query the model. |
| Playground URL | The URL of the Inference Playground, a user-friendly interface for interacting with your deployed model. |
| Dashboard URL | The URL of the Inference Endpoint dashboard, where you can monitor the performance and usage of your model. |

Configuration

| Field | Description |
|---|---|
| Device Architecture | The architecture of the device where the Inference Endpoint is running (e.g., nvidia). |
| Runtime Args | The vLLM runtime arguments used to deploy the Inference Endpoint. You can customize these when creating or updating the Inference Endpoint. |
| HF Token Secret Name | The name of the FlexAI Secret that contains the Hugging Face Access Token. Only shown if the Inference Endpoint requires a Hugging Face Access Token to access the model. |
| API Key Secret Name | The name of the FlexAI Secret that contains the API Key used to authenticate requests to the Inference Endpoint. |

The Logs tab

The Logs tab provides you with real-time logs from your Inference Endpoint, allowing you to monitor its activity and troubleshoot any issues that may arise.

You can use the Search bar to filter the logs by a specific keyword, which is useful for quickly finding relevant entries.

The Playground

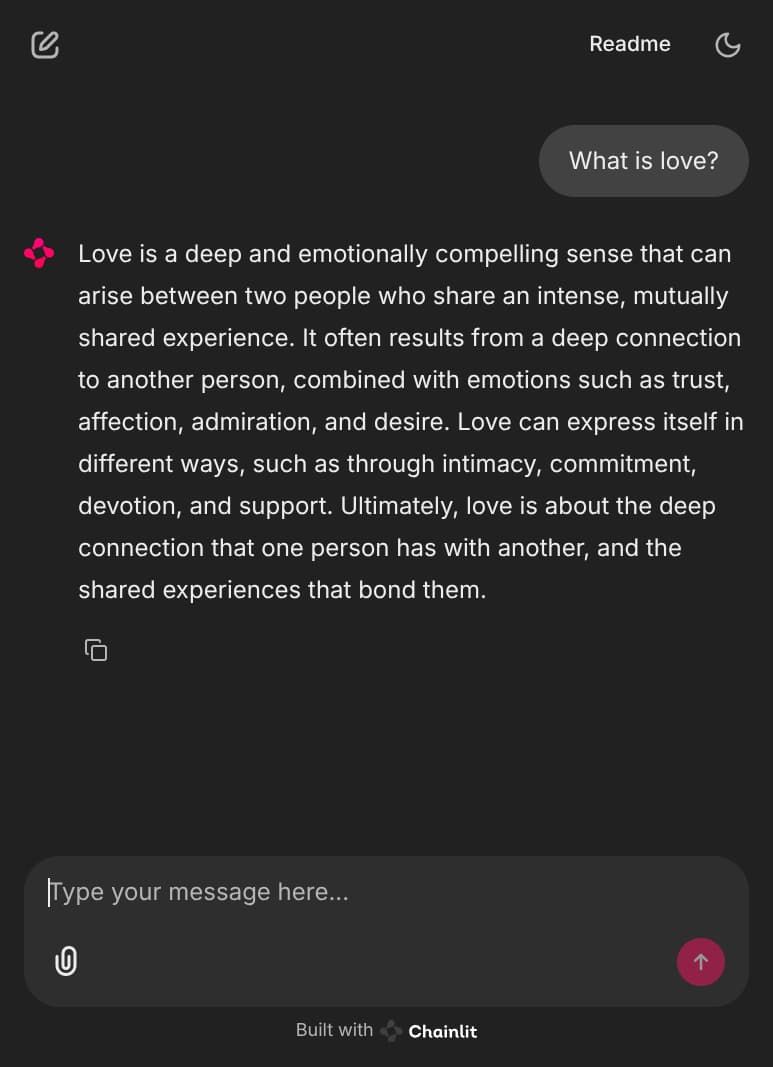

The FlexAI Inference Playground is a convenient way to interact with your deployed models without writing any code or cURL requests. It lets you test your Inference Endpoint through a user-friendly interface.

It is a FlexAI-hosted instance of Chainlit, a UI tool for interacting with multimodal AI models.

To find the Inference Playground URL, open the Inference Endpoint’s Details drawer. The URL is listed in the Summary section of the Details tab, which opens by default.